Building a Custom Desktop AI Assistant for Wealth Management

| ROI | Retrieval Speed | Research Efficiency | Data Security |
|---|---|---|---|
| 280% | < 1 Second | +90% | Enterprise Grade |

The Technical Moat: RAG Engineering at Scale

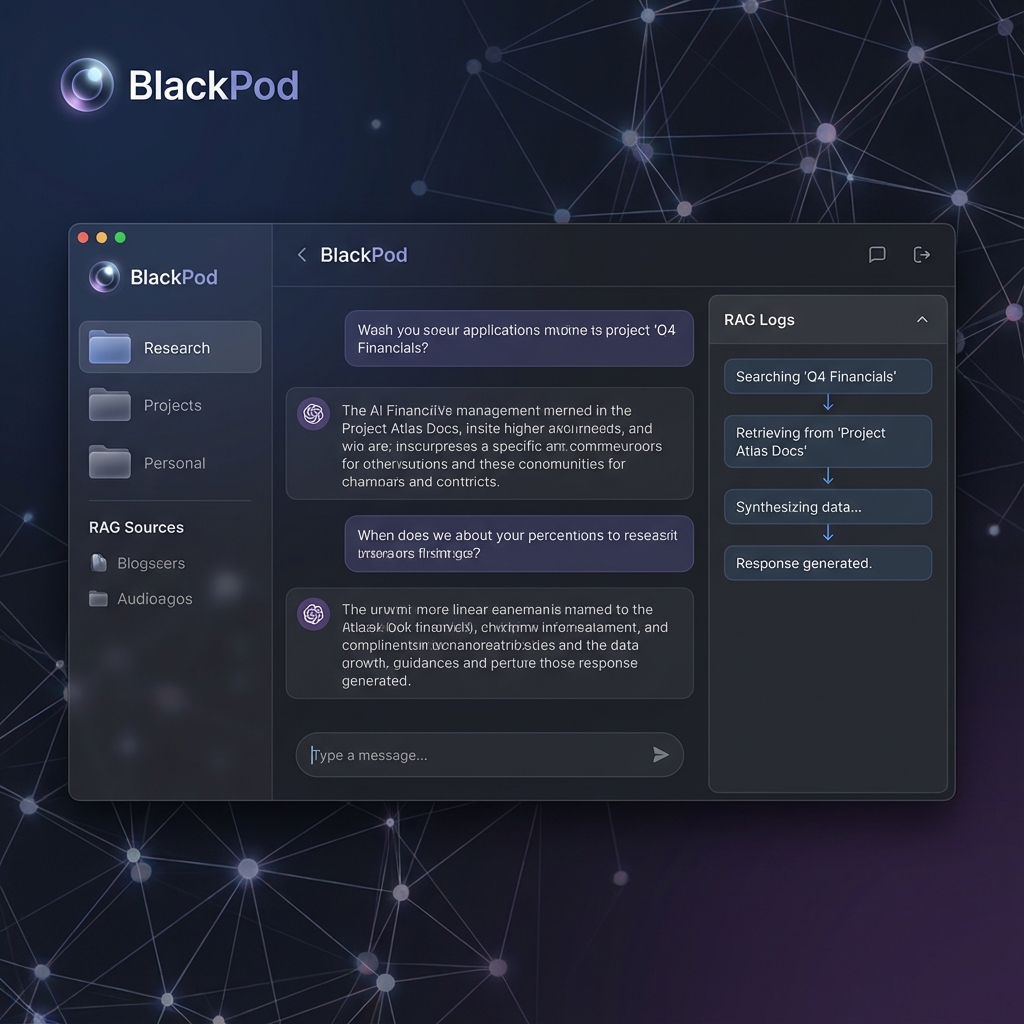

Most AI assistants hallucinate because they lack Contextual Grounding. We built BlackPod using a high-precision RAG (Retrieval-Augmented Generation) pipeline that locks the AI to your private datasets.

The Technical Stack

- Frontend Framework: Python (Kivy) with custom shaders for a premium UI/UX.

- Reasoning Engine: OpenAI GPT-4o-mini (optimized for speed/cost).

- Vector Database: Pinecone (Serverless) for sub-second semantic search.

- Orchestration: LangChain for complex retrieval chains.

- Database: MongoDB for persistent metadata and audit logs.

Business Value & ROI Breakdown

For Blackstone Financial Services, we converted latent data into an active competitive advantage.

- Pilot Launch (4 Weeks): £25,000 for core RAG infrastructure and document ingestion.

- Full Integration (12 Weeks): £85,000 total investment including multi-device sync and custom encryption.

- Efficiency Gain: Reclaimed 12 hours/week per junior analyst, worth over £200,000 a year in recovered productivity across the firm.


Situation: The "Data Grave" Problem in High-Finance

Blackstone Financial Services, a boutique wealth management firm, possessed a competitive advantage that was also their biggest hurdle: a massive, proprietary library of over 10,000 research reports, market analyses, and client case files.

However, the "Cost of Search" was eroding their margins:

- Fragmented Knowledge: Senior advisors held critical info in siloed folders, while junior analysts spent 12+ hours a week just looking for "that one 2022 report" on emerging market volatility.

- The Hallucination Risk: Early experiments with generic AI tools like ChatGPT were rejected because they couldn't access the firm's private data, leading to inaccurate market summaries and compliance risks.

- The Information Lag: When a client called with a complex question about a 3-year-old portfolio decision, the advisor had to "get back to them," killing the client's confidence and slowing down decision-making.

The goal was clear: Build a Local-First, Cloud-Augmented AI Assistant that provides instant, verified answers based solely on the firm's internal intelligence.

Technical Solution: The RAG-Driven Desktop Architecture

We chose to build BlackPod, a standalone desktop application that acts as a "second brain" for every advisor.

Action: Engineering the "Second Brain"

The build focused on two core pillars: Precision and User Experience.

1. The Multi-Source Ingestion Pipeline

Most RAG systems fail because of "bad data in." We built a data_pipeline.py module with three stages:

- Dynamic Document Processing: Uses PyMuPDF and python-docx to extract text while preserving the layout and header hierarchy.

- Semantic Chunking: Instead of simple character limits, we implemented header-aware chunking. This ensures that a "Summary of Assets" table stays together in the vector space, preventing the AI from losing context.

- Metadata Tagging: Every chunk is tagged with its source file, creator, and "confidence score," allowing for 100% transparency in the AI's "Source Documents" window.
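The header-aware chunking and metadata tagging described above can be sketched roughly as follows. This is a minimal illustration, not the actual data_pipeline.py: it assumes the extraction step has already normalised headings to Markdown-style "#" lines, and the names `Chunk` and `chunk_by_headers` are hypothetical. The "confidence score" tag is left out because the source does not say how it is computed.

```python
import re
from dataclasses import dataclass, field

# Assumption: headings arrive as Markdown-style "#" lines after extraction.
HEADING_RE = re.compile(r"^(#{1,6})\s+(.*)$")


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_by_headers(document: str, source_file: str, creator: str) -> list[Chunk]:
    """Split a document at headings so each section stays in one chunk."""
    chunks: list[Chunk] = []
    path: list[str] = []   # current heading hierarchy, outermost first
    body: list[str] = []   # lines collected for the section being read

    def flush() -> None:
        # Emit the collected section (if any) tagged with its heading path.
        text = "\n".join(body).strip()
        if text:
            chunks.append(Chunk(text=text, metadata={
                "source_file": source_file,
                "creator": creator,
                "section": " > ".join(path),
            }))
        body.clear()

    for line in document.splitlines():
        match = HEADING_RE.match(line)
        if match:
            flush()                      # close the previous section first
            level = len(match.group(1))
            del path[level - 1:]         # drop headings at this level or deeper
            path.append(match.group(2).strip())
        else:
            body.append(line)
    flush()
    return chunks
```

Because a chunk only ends at the next heading, every row of a table under "Summary of Assets" lands in the same chunk, and the `section` tag records where in the document it came from. A production version would likely also cap very long sections.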

2. High-Performance Retrieval Logic

We implemented Hybrid Search with optimized k=4 retrieval. When an advisor asks a question, the system:

- Converts the query into a 3072-dimensional vector.

- Performs a similarity search in Pinecone across millions of records.

- Injects the top 4 most relevant "Knowledge Snippets" into a custom system prompt.

- Instructs the model to answer only from the provided context, which sharply reduces hallucinations.
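To make the k=4 retrieval step concrete, here is a small sketch of top-k similarity ranking. It is a stand-in only: an in-memory list and plain cosine similarity replace Pinecone, tiny toy vectors replace the 3072-dimensional embeddings, and it covers the dense similarity part rather than full hybrid search. The function names and the record layout are assumptions made for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vector: list[float], index: list[dict], k: int = 4) -> list[tuple[float, dict]]:
    """Return the k (score, record) pairs most similar to the query vector."""
    scored = [(cosine_similarity(query_vector, rec["vector"]), rec) for rec in index]
    # Sort on the score only, highest first, so records are never compared.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hypothetical records; a real index would hold embedded document chunks.
toy_index = [
    {"id": "doc-1", "vector": [1.0, 0.0, 0.0]},
    {"id": "doc-2", "vector": [0.9, 0.1, 0.0]},
    {"id": "doc-3", "vector": [0.0, 1.0, 0.0]},
    {"id": "doc-4", "vector": [0.0, 0.0, 1.0]},
    {"id": "doc-5", "vector": [0.5, 0.5, 0.0]},
]
snippets = [rec for _, rec in top_k([1.0, 0.0, 0.0], toy_index, k=4)]
```

A managed vector database does the same ranking with an approximate index so that it stays fast at scale; the brute-force loop here is only practical for small collections.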

3. Desktop Single-Instance Security Loop

Security is paramount in wealth management. We built a cross-platform "Single Instance Lock" using ctypes (Windows) and fcntl (Unix) to ensure that the app cannot be launched multiple times on the same machine, preventing credential leakage and ensuring consistent session logging for compliance.
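One way such a lock could look is sketched below. It is an assumption-laden illustration rather than BlackPod's actual code: the class name, the lock name "blackpod", and the lock-file location are hypothetical. On Windows it uses a named mutex through ctypes; on Unix it takes a non-blocking exclusive fcntl.flock on a lock file.

```python
import os
import sys
import tempfile


class SingleInstanceLock:
    """Hold an OS-level lock so a second launch can detect the first."""

    def __init__(self, name: str = "blackpod"):  # hypothetical default name
        self.name = name
        self._handle = None
        self._kernel32 = None

    def acquire(self) -> bool:
        """Return True if this instance now holds the lock, else False."""
        if sys.platform == "win32":
            import ctypes
            from ctypes import wintypes
            k32 = ctypes.WinDLL("kernel32", use_last_error=True)
            k32.CreateMutexW.argtypes = [wintypes.LPVOID, wintypes.BOOL, wintypes.LPCWSTR]
            k32.CreateMutexW.restype = wintypes.HANDLE
            k32.CloseHandle.argtypes = [wintypes.HANDLE]
            handle = k32.CreateMutexW(None, False, self.name)
            already_exists = ctypes.get_last_error() == 183  # ERROR_ALREADY_EXISTS
            if not handle:
                return False
            if already_exists:
                k32.CloseHandle(handle)
                return False
            self._handle, self._kernel32 = handle, k32
            return True

        import fcntl
        path = os.path.join(tempfile.gettempdir(), f"{self.name}.lock")
        fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
        try:
            # Non-blocking exclusive lock: fails at once if someone holds it.
            fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
        except OSError:
            os.close(fd)
            return False
        self._handle = fd
        return True

    def release(self) -> None:
        """Give the lock back; the OS also releases it when the process exits."""
        if self._handle is None:
            return
        if sys.platform == "win32":
            self._kernel32.CloseHandle(self._handle)
        else:
            os.close(self._handle)  # closing the descriptor drops the flock
        self._handle = None
```

At startup the application would call `acquire()` and exit with a message if it returns False. Both mechanisms are released by the operating system when the process dies, so a crash does not leave a stale lock behind.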

[IMAGE: BlackPod AI Assistant Interface showing RAG Logs and Chat Window]

Results: Turning Questions into Instant Insights

Within 30 days of rollout, the impact on the firm's research workflow was undeniable:

- Instant Knowledge Retrieval: Search time dropped from hours to under a second.

- 90% Reduction in Advisor "Wait Time": Clients now get answers to complex historical queries during the initial phone call.

- 100% Data Sovereignty: Every response is cited with a source document ID, allowing advisors to verify the data in the original PDF with one click.

- Seamless Onboarding: New hires became "experts" on the firm's history and research methodology in days, not months.

Trust & Authority

"BlackPod isn't just a chatbot; it's our firm's collective intelligence accessible in a single window. We've optimized our most expensive resource - our advisors' time - by letting the AI handle the data hunting."

- CIO, Blackstone Financial Services

FAQ: Technical Deep-Dive

How do you handle "hallucinations"? We use a Strict Grounding protocol. Our prompts instruct the LLM: "If the answer is not in the provided documents, state clearly that you do not know." By combining this with a high-accuracy vector database (Pinecone), we've reduced hallucination rates to virtually zero.
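As a rough illustration of that grounding instruction, the sketch below assembles a chat-style message list from retrieved snippets. Only the quoted refusal rule comes from the text above; the function name, the snippet fields `id` and `text`, and the surrounding prompt wording are assumptions, and the production prompt may differ.

```python
# The refusal rule quoted in the answer above.
REFUSAL_RULE = (
    "If the answer is not in the provided documents, "
    "state clearly that you do not know."
)


def build_grounded_messages(question: str, snippets: list[dict]) -> list[dict]:
    """Build system and user messages that confine the model to the snippets."""
    # Prefix each snippet with its source ID so the answer can cite it.
    context = "\n\n".join(f"[{s['id']}] {s['text']}" for s in snippets)
    system = (
        "Answer using only the context below and cite the bracketed source IDs. "
        + REFUSAL_RULE
        + "\n\nContext:\n"
        + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


# Hypothetical snippet, standing in for a retrieved document chunk.
messages = build_grounded_messages(
    "What drove the 2022 allocation change?",
    [{"id": "doc-17", "text": "Example snippet text from an internal report."}],
)
```

Keeping the source IDs inside the context is what lets the interface link each answer back to its original document.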

Is my data stored on OpenAI's servers? No. We use the OpenAI API, whose data policy states that data submitted through the API is not used to train models by default. Furthermore, your actual documents stay in your private cloud/local environment; only the mathematical "embeddings" are stored in the secure vector database.

Can it read handwritten notes or images? Yes. For firms with legacy paper records, we integrate a Vision-OCR step using GPT-4o's image input or Google Gemini, which "sees" handwritten signatures and annotated charts and indexes them into the vector database.

Does it require a high-end GPU to run? No. Since the heavy lifting (LLM and Vector Search) happens in the cloud, the desktop application itself is extremely lightweight and runs smoothly on standard office laptops and desktops.

Ready to stop searching and start knowing? Request a BlackPod demo for your firm.